So while the producer-consumer hybrid was born in the cauldron of photo collage, it has spent the last 100 years chewing its way into every corner of social, political, and artistic life. Even the process of writing this piece is enabled by the collage aesthetic. I’m clipping quotes from online resources, reorganizing my words on the fly, and pasting the product into a publishing platform. Think hip-hop and sampling, think *Pride and Prejudice and Zombies*, think inspirational speech supercuts, think every meme you’ve ever participated in, think social media platform interface design and development.

If this were a podcast, this is where I would insert the record scratch.

Because it’s not just the images we use to advertise our avatar-selves on Instagram, or even the functionalities and interfaces provided in the app, that have taken on the cut-and-paste aesthetic, but also the techniques used to make the software itself. Instagram started out as a fairly simple photo feed but didn’t catch proverbial fire until it began directly copy-pasting features from its competitor Snapchat. Disappearing photos and videos sent to individuals or groups of friends (which later became public ‘Stories’), face filters, and decoration with animated stickers all originated with Snapchat. (5)

This is not necessarily a value judgement against Instagram’s design practices (I have plenty of beef with their choice to include intentionally habit-forming functionality, but not with their copying of competitors). Instead, it is meant to point out that collage is integral at all scales of techno-communication today. And it’s not just big platforms constructing themselves from bits and pieces taken from elsewhere. Software development these days never starts from a blank canvas. There’s nearly always a library, API, or component system snagged from zee interonets or from internal legacy code. And sure, yeah, maybe I’m stretching the concept of collage well beyond the confines of its original meaning, but it turns out language is made by those who use it. Get off my smoke-smothered sidewalk, language police! (The Camp Fire still burns as I write this. Today the AQI in San Francisco is 265.)

Like evolution, which cannot jump from a present body format directly to the perfect ideal but instead must step blindly through a thicket of tiny mutations, technology tends to improve incrementally. But then we invented this thing called genetic engineering, which allowed huge changes in genetic makeup that would not have been possible via evolution alone. Collage is the genetic engineering of technological innovation. Much as working in diverse groups recombines people’s varied backgrounds and ways of thinking into a wider range of possible outcomes, collage expands the boundaries of what’s possible to create by bringing material of various sources and types into contact.

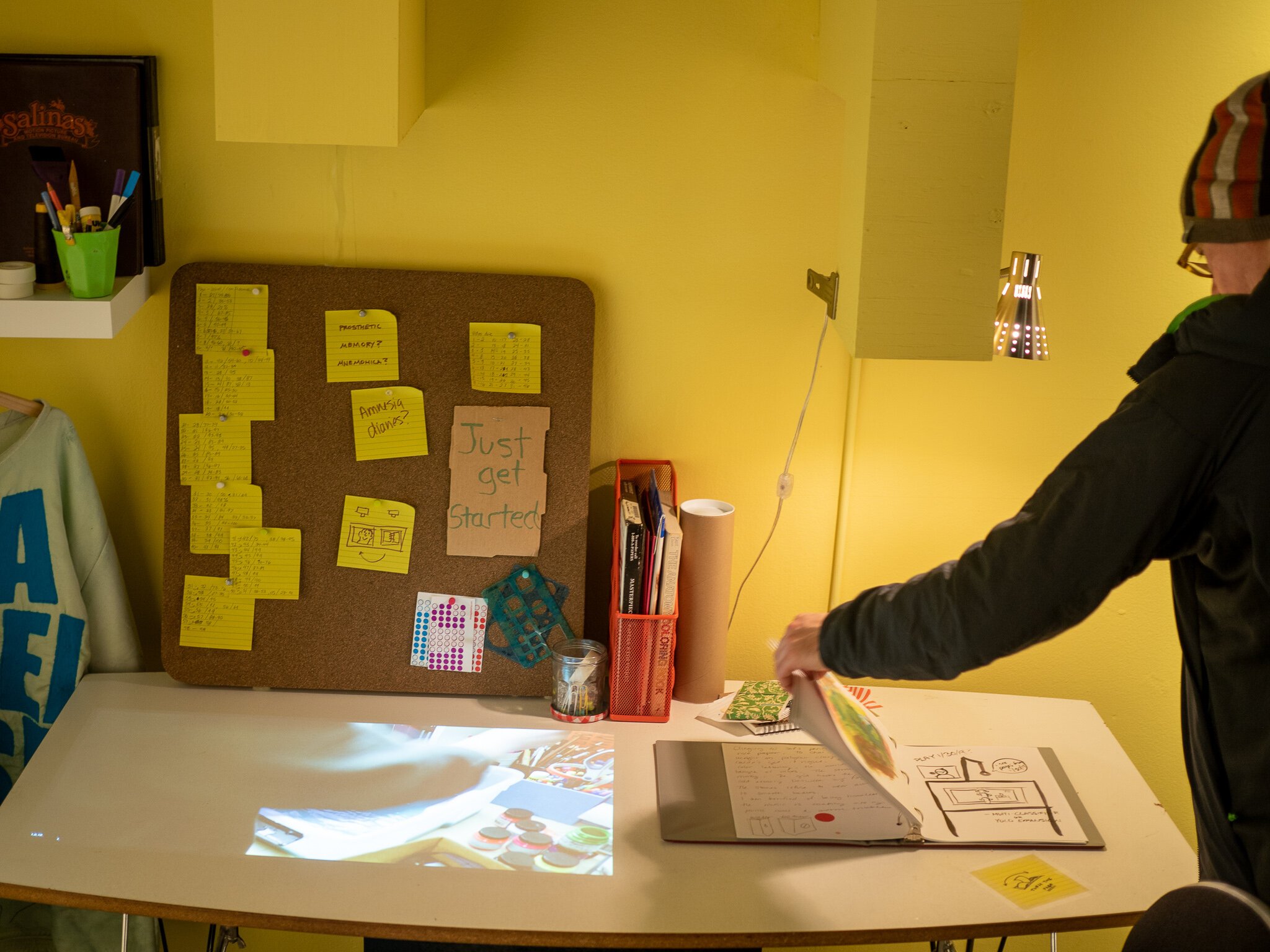

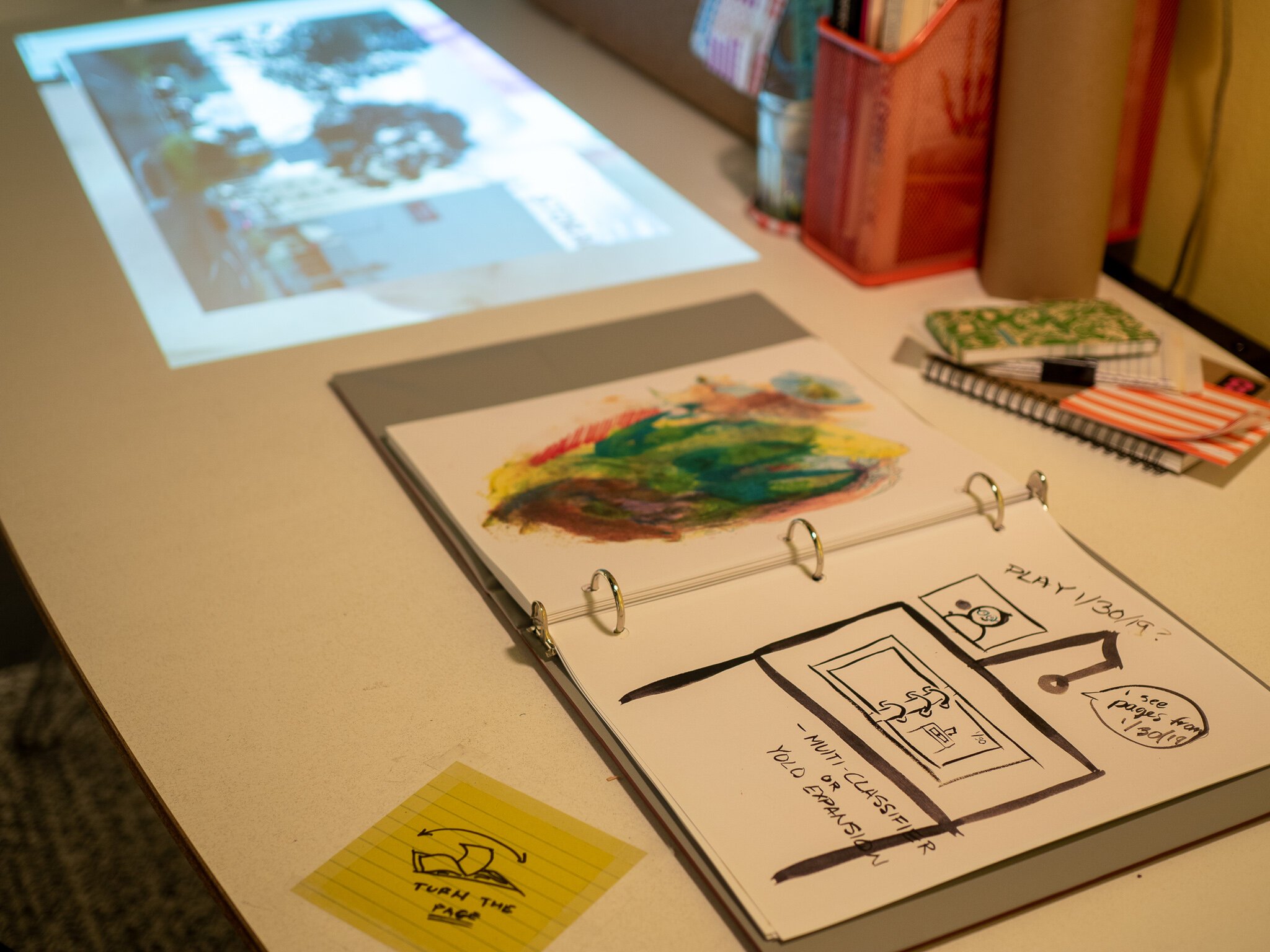

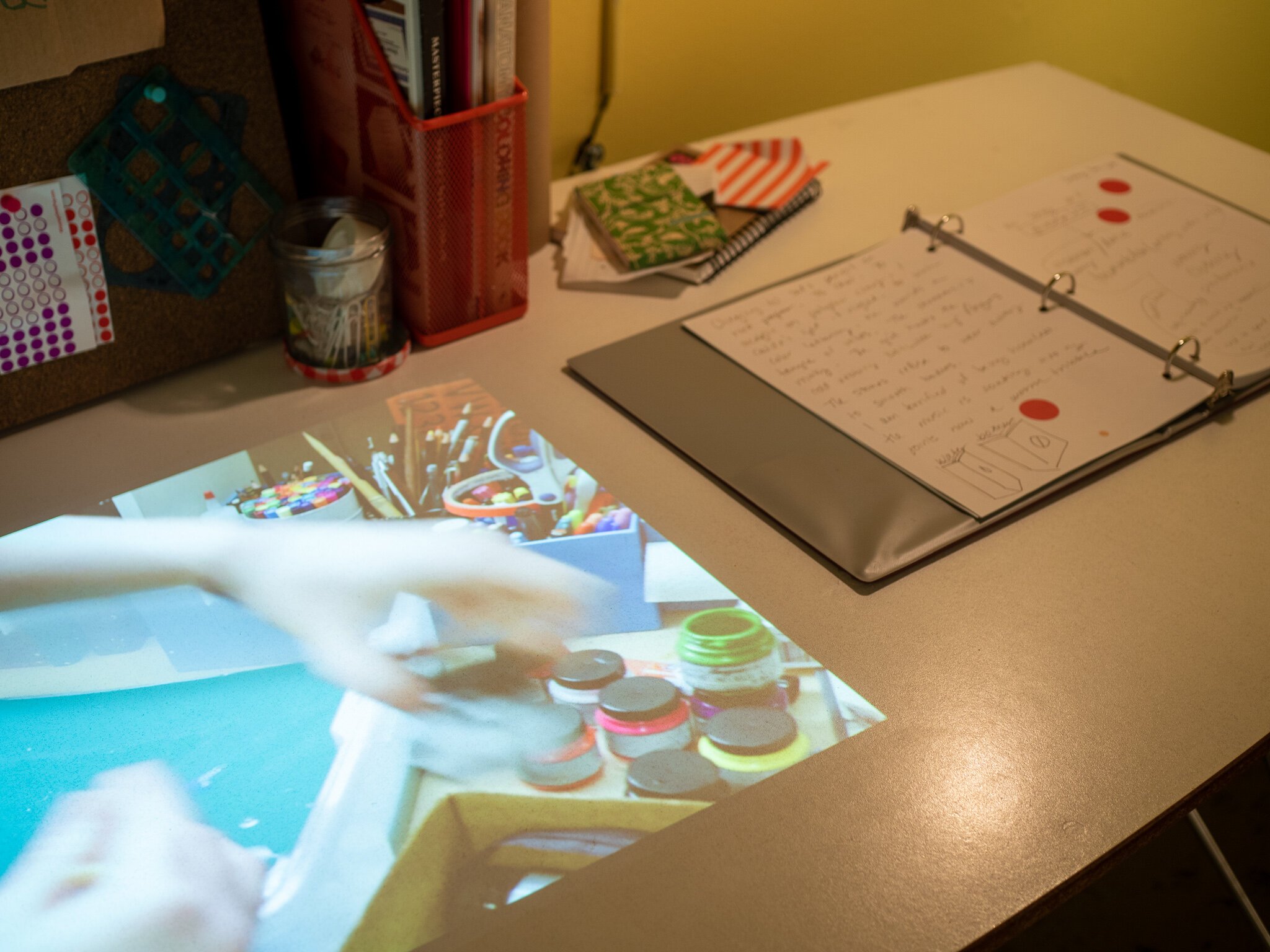

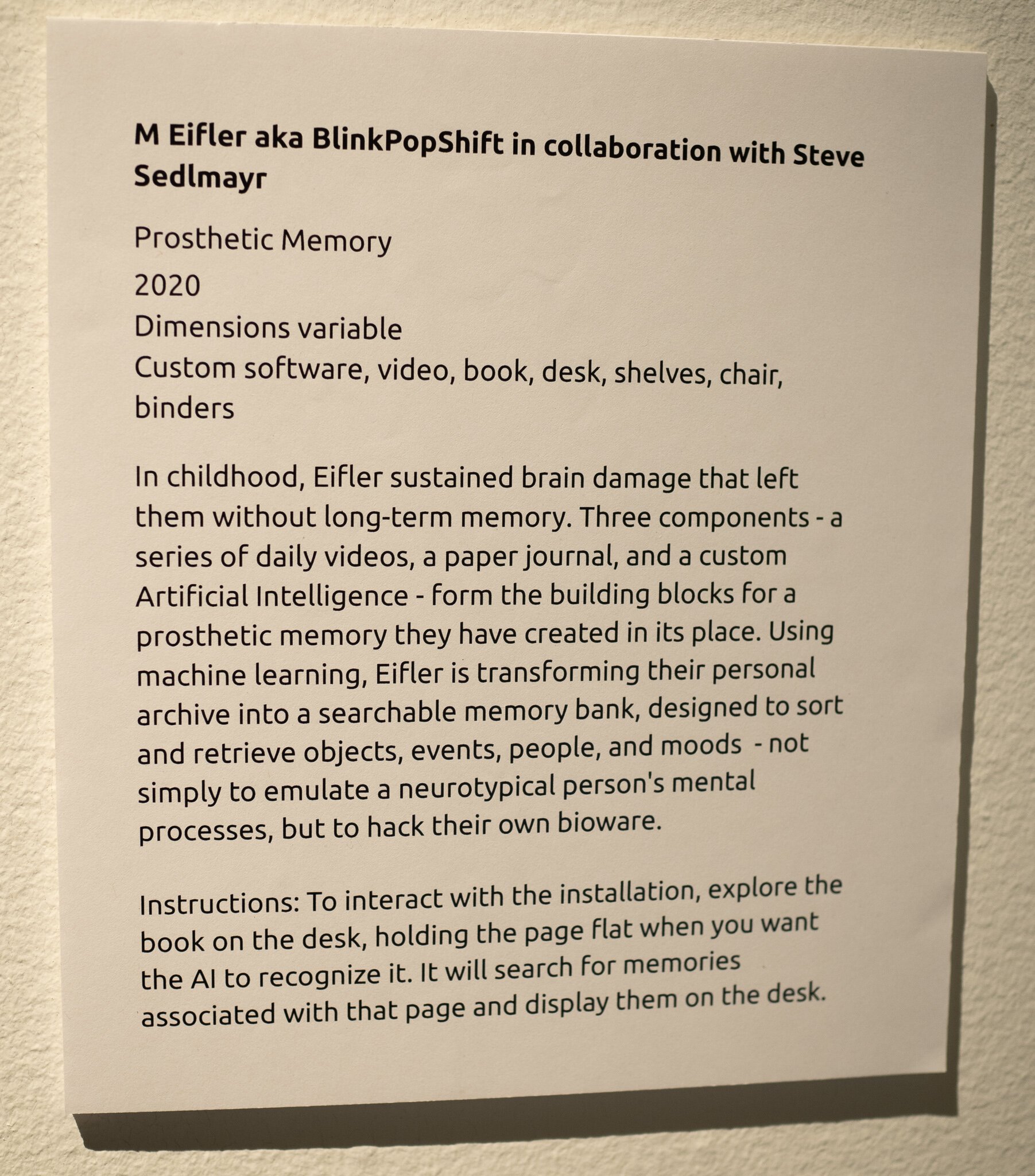

Art-based research for technological exploration, the style of research I specialize in and have done at SAP, Y Combinator Research, and now Microsoft, leans heavily on collage as an inquiry technique. Rather than focusing on incremental improvement, art-based research attempts to jump to far-off, precarious, and often not-viable-at-present lily pads in order to broaden our menu of innovative avenues. Collage is a skill, an art, and the key to democratizing the future of AI.

Tomorrow: AI